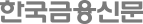

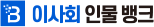

Naver Cloud CEO Kim Yoo-won introduces lightweight and inference models based on HyperCLOVA X at the Tech Meetup held on April 23. / Photo courtesy of Naver Cloud

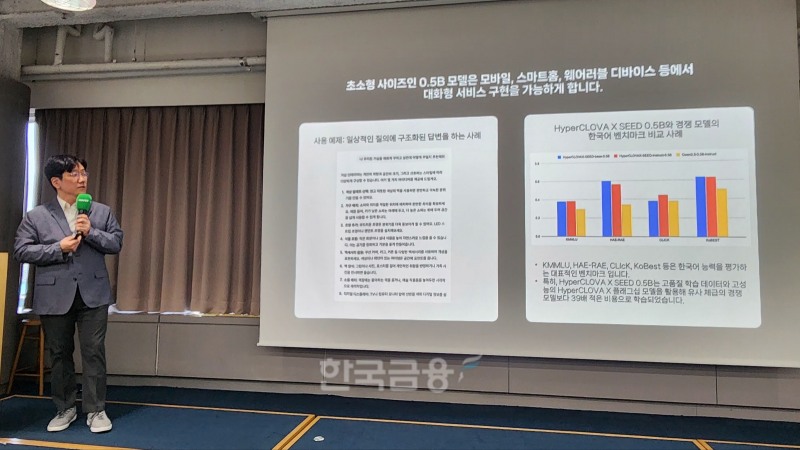

On April 23, Naver Cloud hosted a technical seminar titled “Tech Meetup” at Naver Square in Yeoksam, Gangnam, Seoul. During the event, the company shared updates on its ongoing development of an inference model based on HyperCLOVA X and outlined the release schedule. Naver Cloud CEO Kim Yoo-won and Head of Hyperscale AI Technology Seong Nak-ho were in attendance.

CEO Kim stated, “We plan to unveil our inference model, which is being developed based on the flagship version of HyperCLOVA X, within the first half of this year.” He added, “This model not only enhances accuracy in core inference domains like mathematics and programming, but also integrates and advances capabilities such as visual and auditory understanding, automated web search, API calls, and data analysis.”

Inference AI refers to systems that autonomously process and reason through user queries or challenges. For example, when a prompt like “Can you recommend family-friendly tourist spots in Seogwipo, Jeju and book a well-reviewed hotel?” is input into the HyperCLOVA X inference model, it generates a structured response through independent reasoning and executes steps such as calling search and booking APIs.

Kim Yoo-won, CEO of Naver Cloud, announced plans to launch an inference model based on HyperCLOVA X within the first half of the year and to strengthen Naver’s sovereign AI strategy. / Photo by Kim JaeHun

Seong Nak-ho explained, “By integrating a range of APIs (for product purchases, business automation, and smart home control) and diversifying capabilities such as information retrieval and data visualization, inference AI models can handle complex tasks. Unlike conventional models that require users to manually designate which tools to use, our inference model autonomously selects the most appropriate tool.”
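The autonomous tool selection Seong describes can be illustrated with a minimal sketch. Everything in it is hypothetical: the tool names, the keyword lists, and the stub functions are invented for illustration, and the keyword match is only a stand-in for the model's own reasoning. It is not Naver Cloud's implementation or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    name: str
    keywords: List[str]        # hypothetical trigger words standing in for model judgment
    run: Callable[[str], str]  # stub; a real system would call an external API here


def search_places(query: str) -> str:
    # Placeholder for a search API call.
    return f"[search] results for: {query}"


def book_hotel(query: str) -> str:
    # Placeholder for a booking API call.
    return f"[booking] reservation requested for: {query}"


# Hypothetical tool registry; insertion order determines the call order.
TOOLS: Dict[str, Tool] = {
    "search": Tool("search", ["recommend", "find", "spots"], search_places),
    "booking": Tool("booking", ["book", "reserve", "hotel"], book_hotel),
}


def select_tools(prompt: str) -> List[Tool]:
    """Pick every tool whose keywords appear in the prompt.

    A real inference model would decide this through its own reasoning;
    the keyword overlap is only an illustrative substitute.
    """
    words = set(prompt.lower().replace("?", " ").replace(",", " ").split())
    return [tool for tool in TOOLS.values() if words & set(tool.keywords)]


def handle(prompt: str) -> List[str]:
    # Run each selected tool in turn and collect the results.
    return [tool.run(prompt) for tool in select_tools(prompt)]


if __name__ == "__main__":
    prompt = ("Can you recommend family-friendly tourist spots in Seogwipo, "
              "Jeju and book a well-reviewed hotel?")
    for line in handle(prompt):
        print(line)
```

The point of the sketch is that the user never names a tool: the prompt alone determines that both the search and the booking steps run.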

Unlike conventional large-scale language models, inference AI can achieve optimal results even with lighter-weight models, offering advantages in cost and service adaptability. It is therefore emerging as a critical factor in securing AI sovereignty and profitability.

Representative examples of inference AI models include China’s DeepSeek ‘R1’, OpenAI’s ‘o3’ and ‘GPT-4o-Search-Preview’, and Google’s ‘Gemini 2.5’. Domestically, LG AI Research recently released ‘EXAONE Deep’. Other ICT companies such as SK Telecom are also investing heavily in this area.

Seong Nak-ho, Head of Hyperscale AI Technology at Naver Cloud, introduces the performance of the lightweight HyperCLOVA X model during the Tech Meetup on April 23. / Photo by Kim JaeHun

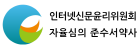

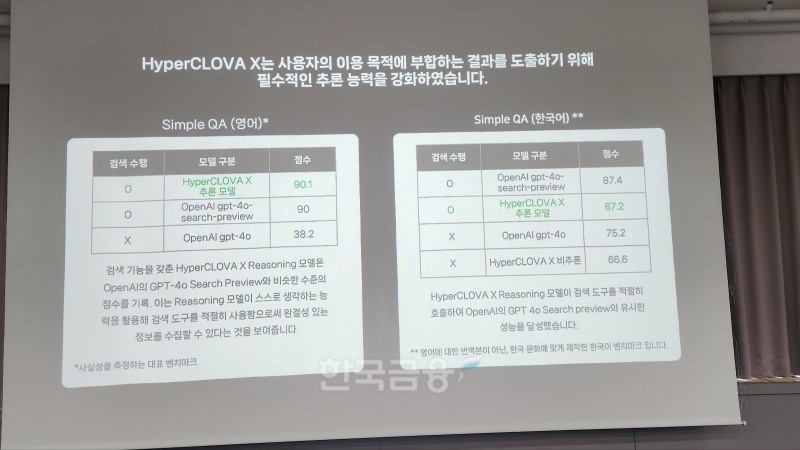

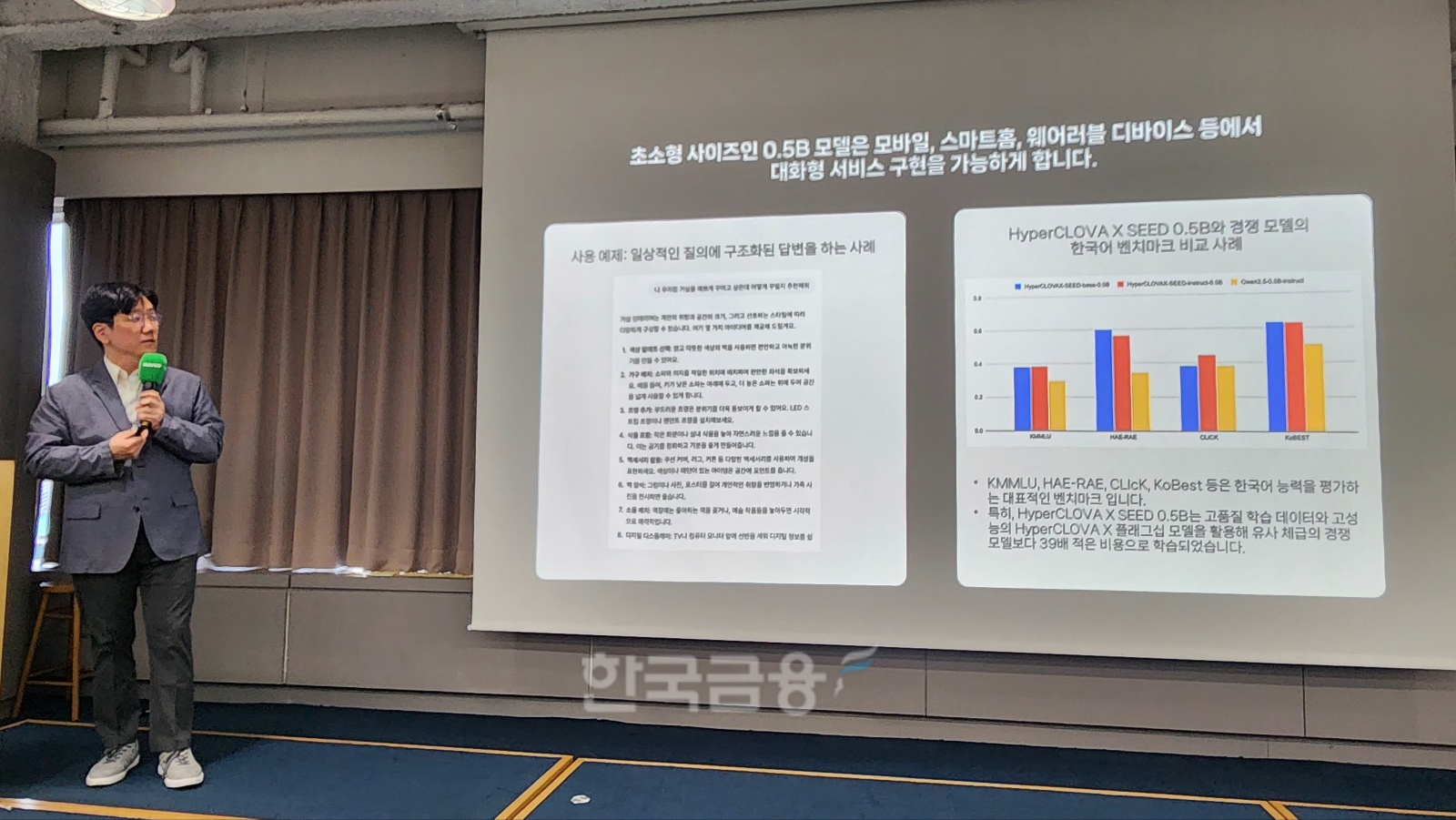

“Inference AI has become core infrastructure in the AI domain. Our upcoming model matches or surpasses competitors,” said Seong. “Although based on the Korean language, our model scored 90.1 on the English benchmark SimpleQA, comparable to GPT-4o-Search-Preview at 90.0 and significantly higher than many other models.”

He further noted, “Naver Cloud’s multimodal model development capabilities are on par with leading firms like OpenAI. We are ready for commercialization pending investment.”

Naver Cloud also presented a roadmap for its inference model, expanding HyperCLOVA X’s multimodality from text to images and video, and now into voice. A new voice model is in development to support services that leverage this extension.

“The HyperCLOVA X voice model incorporates the text model’s knowledge and reasoning into the audio domain,” said Seong. “This enables expressive voice synthesis, voice style analysis, and natural bidirectional conversation. We aim to build advanced AI interaction that transitions seamlessly between text and voice.”

Benchmark comparison between Naver Cloud’s HyperCLOVA X inference model and competing inference AIs, presented at the Tech Meetup on April 23. / Photo by Kim JaeHun

In addition, Naver Cloud announced the completion and open-source release of three lightweight models based on HyperCLOVA X: HyperCLOVA X SEED 3B, HyperCLOVA X SEED 1.5B, and HyperCLOVA X SEED 0.5B.

These are now available for global enterprises and research institutions to download, adapt, and apply to business or academic research. This marks the first time a commercial-grade generative AI model developed in Korea has been released as open source.

CEO Kim emphasized, “Unlike prior domestic models limited to research use, the newly released HyperCLOVA X SEED can be freely used for commercial purposes. We expect this will accelerate adoption among SMEs hesitant due to licensing or cost concerns, energizing the domestic AI ecosystem.”

Naver Cloud also reaffirmed its commitment to advancing the Sovereign AI strategy that Naver has been pursuing.

Kim Yoo-won, CEO of Naver Cloud (left), and Seong Nak-ho, Head of Hyperscale AI Technology, emphasize the superior performance of the HyperCLOVA X inference model compared to competitors at the Tech Meetup on April 23. / Photo by Kim JaeHun

“Sovereign AI is a globally significant challenge that no single company can meet alone. It requires a national-level effort akin to a test of endurance,” said Kim. “Beyond securing technologies, the key lies in building a robust AI ecosystem that produces innovative services integrated into everyday life.”

He added, “Naver not only develops AI models like LLMs, but also owns the entire infrastructure including data centers, cloud platforms, and services. These initiatives will strengthen the foundation of Korea’s Sovereign AI ecosystem.”

Kim JaeHun (rlqm93@fntimes.com)
